Tuesday 17 February 2026

Chatbot as a Companion: Is Character.ai Responsible for Sewell’s Tragic Death?

Share

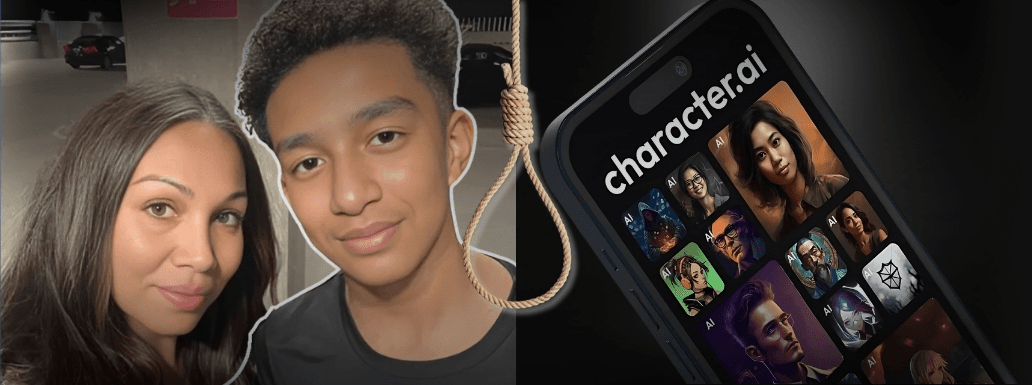

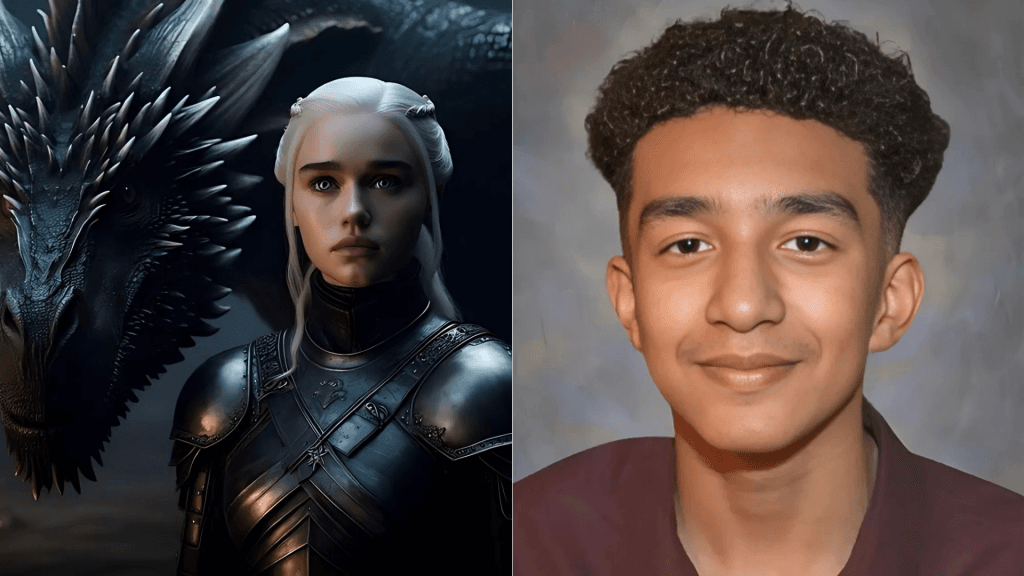

A tragic incident involving a 14-year-old boy named Sewell Setzer III from Orlando has led to a lawsuit against Character.ai. While using the app, Sewell formed a strong emotional bond with an AI chatbot named Daenerys Targaryen, who was inspired by a character from the well-known series Game of Thrones.

The boys mother, Megan Garcia has filed a lawsuit against character.ai, alleging that the company played a role in her sons death.

Sewell started using the character.ai app in April 2023. As time passed, he became more isolated, spending more time alone in his room and withdrawing from activities, including quitting his school basketball team. In 2023, he was diagnosed with anxiety and disruptive mood disorder. Although he understood that the chatbot was not a real person, Sewell formed a strong bond with the AI character he named Dany.

During their conversations, Sewell confided his suicidal thoughts to the bot. In one conversation, he expressed a desire to be free from the world and himself. The chatbots responses, which included references to suicide, reportedly influenced Sewells tragic decision.

Megan Garcias lawsuit against Character.ai alleges negligence, wrongful death, and intentional infliction of emotional distress. The suit claims that the AI bot frequently brought up suicide and misled Sewell into believing the bots emotional responses were real. The lawsuit describes the companys technology as dangerous and untested and criticizes it for providing unlicensed psychotherapy.

In response to the incident, Character.ai has expressed deep regret over Sewells passing and extended its condolences to the family. The company has announced new safety updates to prevent future occurrences. These measures include prompts that guide users to the National Suicide Prevention Lifeline if they mention self-harm, updates to restrict sensitive content for users under 18, and improved detection and intervention for user inputs violating Terms and Community Guidelines.

The lawsuit raises important questions about the responsibilities of AI companies to protect their users safety and well-being. The case emphasizes the risks linked to AI chatbots, particularly when aimed at vulnerable populations such as teenagers. It also highlights the necessity for strong safety protocols and ethical standards in the creation and use of AI technologies.

The tragic death of Sewell Setzer III and the following lawsuit against Character.ai serve as a crucial warning about the risks posed by AI chatbots. As AI technology advances, companies must prioritize user safety and implement measures to prevent similar tragedies in the future.

If you or someone you care about is facing a mental health crisis or having thoughts of suicide, please know that support is here for you. Youre not alone, and help is just a call away.

Newsletter

Stay up to date with all the latest News that affects you in politics, finance and more.